Experimentation teams today are doing more than ever, but in too many companies that work stays invisible because there is no experimentation reporting strategy behind it.

The data backs it up:

According to Speero’s 2025 Experimentation Maturity Report, 63% of companies say they encourage testing… but only 47% say the results are actually recognized.

Translation? Most experimentation work is happening in silos, getting lost in spreadsheets, or failing to connect to the priorities leadership cares about. When that happens, the program stalls—no matter how many tests you run.

Visibility Drives Velocity

Invisibility is one of the most underrated blockers in experimentation. When tests aren’t seen, they don’t get support. When results aren’t understood, they don’t get acted on. And when nobody’s sure what success looks like, you end up reporting CTRs to people who only care about revenue.

I’ve seen this happen in companies of every size:

- The dev team blocks a viable test because they don’t understand the business impact.

- Marketing runs an experiment similar to one product already ran, because nobody knew it had been done.

- The CRO team hits a huge win, but it doesn’t ladder up to any exec KPI—so it quietly fades into backlog purgatory.

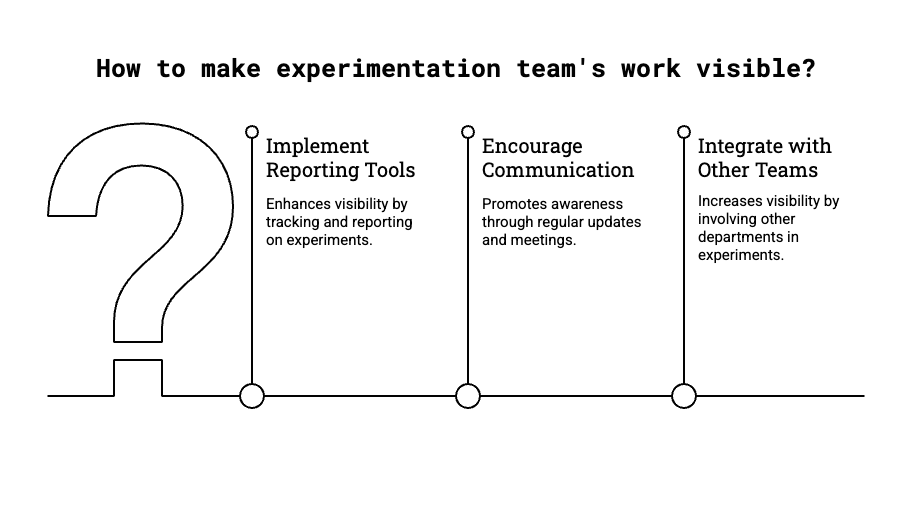

How to Fix It: Build a Better Experimentation Reporting Strategy

Your experimentation program is only as strong as your ability to communicate its impact. That means visibility isn’t just a reporting task—it’s a strategic function. Here’s what’s worked for my team.

- Anchor Everything in Incremental Revenue

When leadership asks if something “worked,” they don’t want to see engagement rate or bounce. They want to see dollar signs.

That’s why my team leads every update with incremental revenue. If you start with that single topline metric, you immediately connect your work to business outcomes. From there, we flex supporting metrics (conversion, CTR, retention, etc.) depending on the audience, but revenue gets you in the door.

“It’s the topline metric that gets attention. It puts a dollar sign on the impact we’re driving.”

– from Dynamic Yield interview
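If you have never put a dollar figure on a test, here is a minimal sketch of one common way to estimate it. It assumes incremental revenue is the difference in revenue per visitor between variant and control, multiplied by the visitors who saw the variant. That formula, the `ArmResult` structure, and the sample numbers are all illustrative assumptions, not the exact method my team or any particular testing tool uses.

```python
from dataclasses import dataclass


@dataclass
class ArmResult:
    """Aggregated results for one arm of a test (hypothetical structure)."""
    visitors: int
    revenue: float

    @property
    def revenue_per_visitor(self) -> float:
        # Guard against an arm that received no traffic.
        return self.revenue / self.visitors if self.visitors else 0.0


def incremental_revenue(control: ArmResult, variant: ArmResult) -> float:
    """Estimate the revenue the variant added over what control would have
    earned from the same number of visitors (assumed formula)."""
    lift_per_visitor = variant.revenue_per_visitor - control.revenue_per_visitor
    return lift_per_visitor * variant.visitors


# Made-up numbers, for illustration only.
control = ArmResult(visitors=10_000, revenue=50_000.0)
variant = ArmResult(visitors=10_000, revenue=54_000.0)
print(f"Incremental revenue: ${incremental_revenue(control, variant):,.0f}")
```

A point estimate like this says nothing about statistical significance or how long the lift will last, so treat it as the headline number and keep the supporting analysis close at hand.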

- Tailor the Story for the Room

A product leader, a CMO, and a brand manager don’t care about the same things, so don’t give them the same slide.

When we present results, we adjust the lens:

- Marketing sees how testing improved campaign performance and media efficiency.

- Product sees how it de-risked roadmap decisions.

- Brand sees how we supported storytelling with customer-relevant messaging.

This isn’t about spinning the data—it’s about making the value visible in a way that matters to each team.

- Use Simple Formats That Answer the Right Questions

We don’t try to impress people with dashboards. We just answer:

- What did we do?

- Did it work?

- Why does it matter?

Each report and deck follows that format. Every quarterly exec update starts with a one-slide summary of our pacing against annual incremental revenue goals. In between, we run a steady rhythm—biweekly check-ins with brand leaders, monthly reports with performance breakdowns, and ongoing test insights shared cross-functionally.

When people see the results clearly, they start to care. And when they care, support (and resources) follow.
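The pacing number on that one-slide summary is simple arithmetic. The sketch below assumes a straight-line target, meaning the annual goal is spread evenly across the days of the year. That is an assumption for illustration: a seasonal business would want a weighted target instead. The function name `pacing_summary` and the figures are hypothetical.

```python
from datetime import date


def pacing_summary(goal: float, actual_to_date: float, as_of: date) -> dict:
    """Compare incremental revenue booked so far with a straight-line
    share of the annual goal (assumed pacing model)."""
    year_start = date(as_of.year, 1, 1)
    year_end = date(as_of.year, 12, 31)
    days_in_year = (year_end - year_start).days + 1
    days_elapsed = (as_of - year_start).days + 1

    # Where we would be today if revenue accrued evenly all year.
    expected = goal * days_elapsed / days_in_year

    return {
        "expected_to_date": expected,
        "actual_to_date": actual_to_date,
        # Above 1.0 means ahead of pace, below 1.0 means behind.
        "pace_ratio": actual_to_date / expected if expected else 0.0,
        "percent_of_goal": actual_to_date / goal if goal else 0.0,
    }


# Made-up numbers, for illustration only.
summary = pacing_summary(goal=2_000_000, actual_to_date=1_100_000,
                         as_of=date(2025, 6, 30))
print(f"Pace vs. plan: {summary['pace_ratio']:.0%}")
print(f"Share of annual goal: {summary['percent_of_goal']:.0%}")
```

Two numbers are usually enough for the slide: whether you are ahead of or behind pace, and how much of the annual goal is already booked.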

- Make It Easy to Say Yes

In experimentation, complexity kills momentum. That’s why we align early with teams like brand, UX, and marketing: not just to “get buy-in,” but to make sure our work supports their goals too.

Once other teams feel like testing is a tool that helps them, they become advocates, not roadblocks.

“I’m not there to show off a feature—I’m there to explain why it matters.”

TL;DR: Scaling Starts With Visibility

Without a clear experimentation reporting strategy, even the best wins go unnoticed.

Most experimentation programs don’t fail because of bad ideas. They fail because their best work never gets seen, shared, or supported.

If your program is stalling, take a step back and ask:

- Are we connecting experiments to outcomes leadership cares about?

- Are we communicating results in a way each audience understands?

- Are we making it easy for others to act on what we learn?

If not, the first test you should run might not be on your site—it might be on your reporting.

Want help fixing that? I’ve helped companies turn hidden experimentation work into executive-level momentum.
